The ZLST Lab

The ZLST Lab is affiliated with Computer Science at Zhejiang University. Led by Professor Chun Chen, the lab focuses on cutting-edge research in Big Data and Artificial Intelligence, particularly Recommender Systems, Graph Mining, Diffusion Models, Knowledge Distillation, and Large Language Models. Our team has received five provincial/ministerial-level science and technology awards and five best paper awards at top international academic conferences. We look forward to contributing to the field of artificial intelligence in the era of large models!

Primary Research Interests

Recommender systems

Recommender systems (RS) use user behavior and content characteristics to predict user preferences and provide personalized recommendations, serving as core infrastructure for e-commerce and social media platforms. We are devoted to cutting-edge research topics including trustworthy recommendation, sequential recommendation, the theoretical foundations of recommender systems, and LLM-enhanced recommendation paradigms.

Graph mining

Graph data mining aims to extract the underlying relationships and patterns between entities from various kinds of graph-structured data. It has many real-world applications, such as social network analysis, bioinformatics, and financial fraud detection. We are committed to cutting-edge research topics including graph transformers, graph foundation models, graph structure learning, graph querying, and LLM-empowered graph models.

Diffusion Models

Diffusion models are a class of generative models that have gained significant attention in recent years, particularly in computer vision and natural language processing. By establishing a mapping from a noise distribution to the data distribution, diffusion models can generate high-quality data such as images, video, audio, and text. We are devoted to providing a deeper understanding of the generation dynamics of diffusion models and to accelerating their sampling processes.

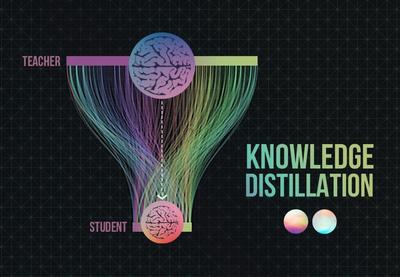

Knowledge distillation

Knowledge distillation aims to achieve efficient transfer of knowledge from complex models to lightweight models. The goal is to balance the inference cost and performance of compact models, facilitating the deployment of intelligent models in resource-constrained scenarios such as edge computing and mobile devices.

Latest Preprints

Lie Symmetry Net: Preserving Conservation Laws in Modelling Financial Market Dynamics via Differential Equations. In TMLR 2025.

Rankformer: A Graph Transformer for Recommendation based on Ranking Objective. In WWW 2025.

DICE: Data Influence Cascade in Decentralized Learning. In ICLR 2025.

Dynamic Graph Transformer with Correlated Spatial-Temporal Positional Encoding. In WSDM 2025.

MSL: Not All Tokens Are What You Need for Tuning LLM as a Recommender. In SIGIR 2025.